Docker Push over 4G Bandwidth

Docker push can eat all your 4G data. This post shows you how to prevent that.

Whilst working remotely from up north, there have been a few times when I needed to push a new Docker image to a remote registry. This is something you normally take for granted over a wired or wireless LAN connection. However, on a mobile 4G connection the experience is completely different.

Speed

The first thing I noticed was the speed of pushing. As 4G bandwidth is much more limited, I hit bottlenecks whenever multiple image layers were being pushed at the same time.

This caused Docker to lock up at times, and some pushes failed.

To solve this, I set the Docker daemon's maximum number of concurrent uploads to 1 (the default is 5) in the file /etc/docker/daemon.json:

    {
        "max-concurrent-uploads": 1
    }

Making this process serialized meant my 4G connection wouldn't be hammered as much and ensured a more reliable push.

Note: you should revert this when you are back on a higher-bandwidth connection.
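Switching this setting on and off by hand gets tedious, so here is a minimal sketch of a helper that does it. The function name `set_max_concurrent_uploads` is my own invention, not part of Docker, and the sketch assumes daemon.json is either missing, empty, or valid JSON. It only edits the file; the daemon still has to reload its configuration (for example by being restarted) before the new value applies.

```python
import json
from pathlib import Path


def set_max_concurrent_uploads(path, limit):
    """Set "max-concurrent-uploads" in a daemon.json file.

    Pass limit=None to remove the key, so the daemon falls back to its
    built-in default. All other keys in the file are left untouched.
    Returns the resulting configuration as a dict.
    """
    path = Path(path)
    config = {}
    # A missing or empty file is treated as an empty configuration.
    if path.exists() and path.read_text().strip():
        config = json.loads(path.read_text())

    if limit is None:
        config.pop("max-concurrent-uploads", None)
    else:
        config["max-concurrent-uploads"] = int(limit)

    path.write_text(json.dumps(config, indent=2) + "\n")
    return config
```

On 4G you would call `set_max_concurrent_uploads("/etc/docker/daemon.json", 1)` with root permissions, and pass `None` instead of `1` once you are back on a faster connection.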

Image Size

Where permitted, it helps to shrink the size of the image. Using Alpine Linux over Debian as the base netted me savings of around 400 MB.

The right answer!

The two steps above helped. However, the next issue I faced was that every push was eating 70-100 MB of my data allowance, with several pushes happening each day.

For an infrequent task this wouldn't be such an issue, but spending long periods working from a remote location meant that a new solution had to be found.
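To put a rough number on that, here is a back-of-the-envelope sketch. Only the 70-100 MB per push figure comes from my own usage; the 5 pushes a day and 20 working days a month are assumptions picked purely for illustration, and `monthly_push_data_mb` is just a throwaway helper name.

```python
def monthly_push_data_mb(mb_per_push, pushes_per_day, working_days=20):
    """Rough data used by image pushes over a month, in megabytes."""
    return mb_per_push * pushes_per_day * working_days


# 70-100 MB per push is the observed range; 5 pushes a day is an assumption.
low = monthly_push_data_mb(70, 5)
high = monthly_push_data_mb(100, 5)
print(f"{low / 1000:.1f}-{high / 1000:.1f} GB per month")  # prints "7.0-10.0 GB per month"
```

Under those assumptions, pushes alone would use several gigabytes of a monthly allowance.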

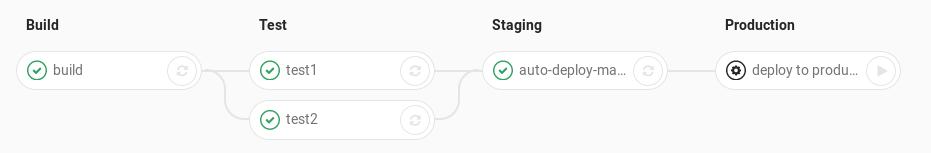

The solution came from my GitLab repository, which has built-in CI pipelines.

Rather than pushing Docker images to a remote server from my own machine, I set up the repository to build and test the codebase and then push the image to the environments.

This shrank the data usage from megabytes to kilobytes, since only the git push has to travel over 4G!

If you are not using GitLab, then CircleCI can be configured to listen to a GitHub repository. Otherwise, a good old Jenkins box can do the trick too!